Probabilistic Machine Learning - Notes

Notes on the "Probabilistic Machine Learning" Lecture by Prof. Dr. Philipp Hennig from University of Tübingen Germany) 2020 / 2021. Lectures on YouTube © Philipp Hennig / University of Tübingen, 2020 CC BY-NC-SA 3.0. Lots of additional details can be found in the freely available book Gaussian Processes for Machine Learning by Rasmussen & Williams (2006). I personally also found the Statistics 260 Lecture by Michael Jordan at Berkeley EECS helpful, which I last checked 11-10-2022 (europ.).

Latest Changes: (europ.).

Notation

- External links are formatted as links and cross references are formatted as crossrefs. Other emphasized parts are emphasized.

- Note that lots of the rules for events on a probability space get generalized to random variables without further discussion, which can be found in introductory stochastic books. Additionally the notation of probability measures is often "abused", meaning shortened, by assuming that it is clear which part belongs to which random variable etc.

- If not stated otherwise $\Omega$ represents the "base"-set of some measurable space and $\Sigma$ represents the $\sigma$-algebra.

- Given a set of Variables $x_i, \ i\in I\subset \mathbb N$ the full "vector" of all $I$ variables is simply denoted by $x = (x_1,x_2, \dots)$. Subsets of this vector connected to subsets $K$ of $I$, i. e. $K\subset I$ are then denoted by $x_{K}$. Also $x_{I\setminus K}$ denotes the collection of all $x_i$ except the ones for $i \in K$.

- If not stated otherwise $A^C$ represents the complement relative to "base"-set of some measurable space $\Omega$, i. e. $A^C := \Omega\setminus A$.

- Probabilities of intersections will get abbreviated especially for random variables:

\begin{align*} P(A, B) := P(A\cap B) \, . \end{align*}

- If not stated otherwise $B_1,B_2,...\in \Sigma$ denotes a finite or countable infinite family of sets.

- If a random variable is called e. g. $X$, then the sufficient space on which it lives (mostly it will be some dimensional $\mathbb R^d$) gets shortly denoted by $\mathbb X$.

- The indicator function is used as

\begin{align*} 1_A(x) = \begin{cases} 0, & x\not \in A \\ 1, & x \in A \end{cases} \, . \end{align*}

- The Identity Matrix is denoted by a boldsymbol $\boldsymbol 1$ without further denoting the dimensions.

- Given a probability distribution $\mathcal P$ with parameters $\theta$, e. g. the normal distribution

$\mathcal N(\mu, \sigma^2)$ or the uniform distribution $U(a,b)$ and a random variable distributed accordingly

$X\sim \mathcal P(\theta)$. Then the density is denoted as

\begin{align*} P(X=x) =p(x) = \mathcal P(x; \theta)\, . \end{align*}

- The notation $\constwrt{x}$ represents terms which are constant with respect to (wrt.) $x$.

- If space is an issue, determinants of matrices get denoted as $\vert A \vert$. Elsewhere $\det A$ is used for clarity.

The Toolbox

Throughout the lecture and these notes we keep track of a "Toolbox" for Modelling- and Computation-Techniques. Directed Graphical Models are the first entry:

| Modelling Techniques | Computation Techniques |

Preface: A Short Primer on Measure Theory

- This short preface is not completely included in the actual lecture but there are notations from measure theory used, which are briefly introduced here, of course without going into details of topology (because of the authors incapacity to do so).

- $\boldsymbol\sigma$-Algebra / $\boldsymbol\sigma$-Field: Let $\Omega$ be some set and let $\mathcal P(\Omega)$ represent its power set. Then a subset $\Sigma \subset \mathcal P(\Omega)$ is called a $\sigma$-algebra if it satisfies the following three properties:

- $\Omega \in \Sigma$.

- Closeness under complementation: $A \in \Sigma \quad \Rightarrow \quad \Omega \setminus A$ is in $\Sigma$.

- Closeness under countable unions: $A_1, A_2, ...\in \Sigma \quad \Rightarrow \quad \bigcup A_i \in \Sigma$.

- Additional Properties of $\boldsymbol\sigma$-Algebra: The following properties can be derived from the three parts of the definition above:

- $\emptyset \in \Sigma$ for every $\sigma$-algebra $\Sigma$.

- $\bigcap A_i \in \Sigma$.

- Measurable Space: The pair $(\Omega, \Sigma)$ is called a measurable space and the elements of $\Sigma$ are called measurable sets.

- Measure: Let $\Omega$ be a set and $\Sigma$ a $\sigma$-algebra over $X$. A set function $\mu: \Sigma \to \mathbb R \cup \{-\infty, \infty\}$ is called a measure if it satisfies:

- Non-negativity: $\mu(A) \geq 0$ for all $A\in \Sigma$.

- Null empty set: $\mu(\emptyset) = 0$.

- Countable additivity ($\sigma$-additivity): For $A_1,A_2,...\in \Sigma$ pairwise disjoint ($A_i

\cap A_j =\emptyset $ if $i\neq j$) $\mu$ holds:

\begin{align*} \mu\left(\bigcup_{k=1}^\infty A_k \right) = \sum_{k=1}^\infty \mu(A_k)\, . \end{align*}

- Measure Space: The triplet $(\Omega, \Sigma, \mu)$ is called a measure space.

- "Measure Integral"-Notation: Given a measure space and $f: \Omega \to \mathbb R^d$. Then the following notation gets used to "measure" the function:

\begin{align*} \int_\Omega f(y) \, \mathrm d \mu(y)\, \cong \int_\Omega f(y)\mu(y)\, \mathrm dy\, . \end{align*}

- E. g. lets look at the Lebesgue Measure, which resembles the "classical" known integration technique on $\mathbb R^d$. Let $A \subset \mathbb R$. Then the Lebesgue measure $\lambda(A)$ is the Infimum of the summed lengths of all sequences of open subsets of $\mathbb R^d$ which contain $A$. Therefore at integration time one multiplies the function $f$ with a length of an interval, which leads to something really close to the known Riemann Integral from calculus.

- The arguably easiest measure to execute on a test function $f$ is the Dirac Measure: $\delta_x(A) = 1_A(x)$ such that:

\begin{align*} \int_\Omega f(y) \, \mathrm d\delta_x(y) = \int_\Omega f(y) \delta_x(y)\, \mathrm dy = f(x) \end{align*}

where in the second formulation the $\delta$-function has been used (which is not actually a function.)

Probability Theory

- Probability Measure: Let $(\Omega, \Sigma)$ be a measurable space. A probability measure $P$ is a measure with total measure $1$ meaning

\begin{align*} P(\Omega) = 1\, . \end{align*}

- Probability Space: A triplet $(\Omega, \Sigma, P)$ is called a probability space. $\Omega$ can then be interpreted as the set of all possible outcomes (atomic events) of an experiment. $A\in \Sigma$ can be interpreted as an event being a set of possible outcomes.

- A probability measures can be uniquely defined by defining $P(\omega)$ for all atomic events $\omega \in \Omega$.

- Additional Properties of Probability Measures: The definition of a probability measure implies the following:

- Intersections and Unions:

\begin{align*} P(A\cup B) = P(A) + P(B) - P(A\cap B)\, . \end{align*}

- Complements:

\begin{align*} P(A) = 1-P(A^C)\, . \end{align*}

- Let $B_1,B_2,...\in \Sigma$ pairwise disjoint, with $\bigcup B_i = \Omega$. Then

\begin{align*} P(A) = \sum_i P(A \cap B_i)\, . \end{align*}

- Conditional Probability: Let $A, B \in \Sigma$. Then the conditional probability of $A$ given $B$ is defined as

\begin{align*} P(B\vert A) := \frac{P(A, B)}{P(B)} \end{align*}

- This immediately yields the product rule:

\begin{align*} P(A, B) = P(A\vert B) \cdot P(B) = P(B\vert A) \cdot P(A)\, . \end{align*}

- Note that for multiple conditional probabilities we also have:

\begin{align*} P(A\vert B, C) = \frac{P(A, B, C)}{P(B, C)}\, . \end{align*}

- Law of Total Probability: Let $B_1, B_2, ... \in \Sigma$ ($n$ might be infinity) be a set of pairwise disjoint events with $\bigcup_iB_i=\Omega$. Then for each event $A$ it holds:

\begin{align} P(A) = \sum_i P(A\cap B_i) = \sum_n P(A\vert B_i)P(B_i) \, . \label{eq:total_prob} \end{align}

- Chain Rule of Probability: Let $A_i \in \Sigma$, $i=1,...,n$ be a set of events, then

\begin{align} P \left( \bigcap_{i=1}^n A_i \right) = \prod_k P\left( A_k \Big{\vert} \bigcap_{j=1}^{k-1}A_j \right) = P(A_n\vert A_{n-1} \cap ... \cap A_1) \cdot P(A_{n-1}\vert A_{n-2} \cap...\cap A_1) \cdot ... \cdot P(A_1) \label{eq:chain_prob} \end{align}

- Bayes Theorem and Bayesian Modelling - Using data $D$ and latent variable $X$ one denotes:

\begin{align} \underbrace{P(X\vert D)}_{\textsf{posterior for $X$ given $D$}} = \frac{\overbrace{P(D\vert X)}^{\textsf{likelihood for $X$}} \cdot\overbrace{P(X)}^{\textsf{prior for $X$}}}{\underbrace{P(D)}_{\textsf{evidence for the model}}} = \frac{P(X) \cdot P(D\vert X)}{\displaystyle\sum_{x\in \mathrm{supp}(X)} P(X)\cdot P(D\vert X)} \, . \label{eq:bayes} \end{align}

- When found a posterior distribution of the model parameters one can use this distribution to sample sets of parameters. Using these sampled parameters one can go on to sample additional data-predictions which are drawn from the predictive distribution $P(D\vert X)$ for given parameters $X$. Iterating these steps lead a so called Posterior Predictive Function for new data. This might be necessary, if the posterior integral is intractable.

- Bayes Theorem implications:

- Constant Likelihoods do not provide any information.

- A very unlikely hypothesis can become dominant if it is the only one explaining the data well.

- No data can revive an a priori impossible hypothesis.

- Additional evidence may force you to reconsider your prior.

- The hypothesis space has to contain some explanation for the data.

- The $\sigma$-algebra not the exact choice of $P(D)$ is often the most important prior assumption.

- Probabilistic reasoning is a mechanism, it does not replace creativity.

Random Variables

- When moving from boolean logic to probability one has to take care of the probability of all atomic events, which already for simple examples need huge amount of memory $(2^{26}-1$ floats for "storing" the alphabet).

- Being uncertain is potentially much more expensive in terms of computation and memory than simply committing to a single hypothesis. This is the key challenge of probabilistic reasoning in practice.

- Inverse Image: Let $X:\Omega \to \X$. The preimage or inverse image of a set $B\subset \X$ under $X$, denoted by $X^{-1}(B)$ is the subset of $X$ defined by

\begin{align*} X^{-1}(B) = \big\{ \omega \in \Omega : X(\omega) \in B \big\} \, . \end{align*}

- Measurable Functions and Random Variables: Let $(\Omega, \Sigma)$ and $(\X, \Xi)$ be two measurable spaces. A function $X:\Omega \to \X$ is called measurable if $X^{-1}(B) \in \Sigma$ for all $B \in \Xi$. If there is, additionally, a probability measure $P$ on $(\Omega, \Sigma)$, then $X$ is called a random variable.

- Distribution Measure: Let $X:\Omega \to \X$ be a random variable. Then the distribution measure (or law) $P_X$ of $X$ is defined for any $B \in \Xi$ as

\begin{align*} P_X(B) = P(X\in B) = P\left( X^{-1}(B) \right) = P\big( \{\omega \, \vert \, X(\omega)\, \in B\} \big)\, . \end{align*}

- Support of a RV: The support of a RV is given by the set of elements of $\X$ where the probability of

$X$ is not zero:

\begin{align*} \mathrm{supp}\, X = \big\{ x\in \X \ \vert \ P_X(\{x\}) \neq 0 \big\}\, . \end{align*}

Note that $\X$ can sometimes be already defined as being equal to $\mathrm{supp}\, X$ because points with no probability are irrelevant for modelling.

- Abusing Notations: This is where the abuse of notation comes in. When $X$ represents a random variable $P(X)$ implicitly represents the distribution measure of $X$. This is also used for defining "junctions" and "unions" of random variables: For junctions of random variables the following notation is used:

\begin{align*} P(X, Y) = P(X\in B, Y \in C) = P\Big( \{\omega \, \vert \, X(\omega)\, \in B\} \cap \{\omega \, \vert \, Y(\omega)\, \in C\} \Big) \, . \end{align*}

- Independence: Using the definitions from above one can apply the concepts of conditional (in)dependence directly to RVs. Two RVs $X$ and $Y$ are therefore called independent if

\begin{align*} P(X, Y) = P(X)\cdot P(Y)\, . \end{align*}

- Joint and Marginal Distributions: The joint distribution of multiple RVs $X=(X_1,X_2, \dots)$ where the indices are in $I\subset \N$ is

\begin{align*} P(X) = P(X_1,X_2, \dots)\, . \end{align*}The marginal distribution of a subset $K\subset I$ is given by integrating out all other variables:\begin{align*} \mathcal P (x_K) = \int \mathcal P (x) \, \d x_{I\setminus K} \end{align*}

- Conditional Independence: Two RVs $X$ and $Y$ are conditionally independent given RV $Z$, iff their conditional distribution factorizes:

\begin{align*} P(X, Y \vert Z) = P(X\vert Z) \cdot P(Y\vert Z) \quad \overset{(I)}{ \Rightarrow} \quad P(X \vert Y, Z) = P(X \vert Z) \end{align*}

i. e. "given information about $Z$, $Y$ does not provide any further information about $X$." A common notation is:

- Calculation of the implication $(I)$: Let $X, Y, Z$ be RVs with $P(X, Y \vert Z) = P(X\vert Z) \cdot P(Y\vert Z)$. Then:

\begin{align*} P(X\vert Y, Z) = \frac{P(X, Y, Z)}{P(Y, Z)} = \frac{P(X, Y, Z)}{P(Z)} \cdot \left( \frac{P(Y, Z)}{P(Z)} \right)^{-1} = P(X, Y\vert Z) \cdot \frac{1}{P(Y\vert Z)} = P(X\vert Z)\, . \end{align*}

- Independence (Assumptions) can help tremendously to simplify probabilistic models (Burglary, Alarm, Earthquake example from the lecture)

- Inference in the Bayesian framework consists of:

- Identify all relevant variables.

- Define the joint probability for the generative model

- Mechanically using Bayes Theorem and computing marginals.

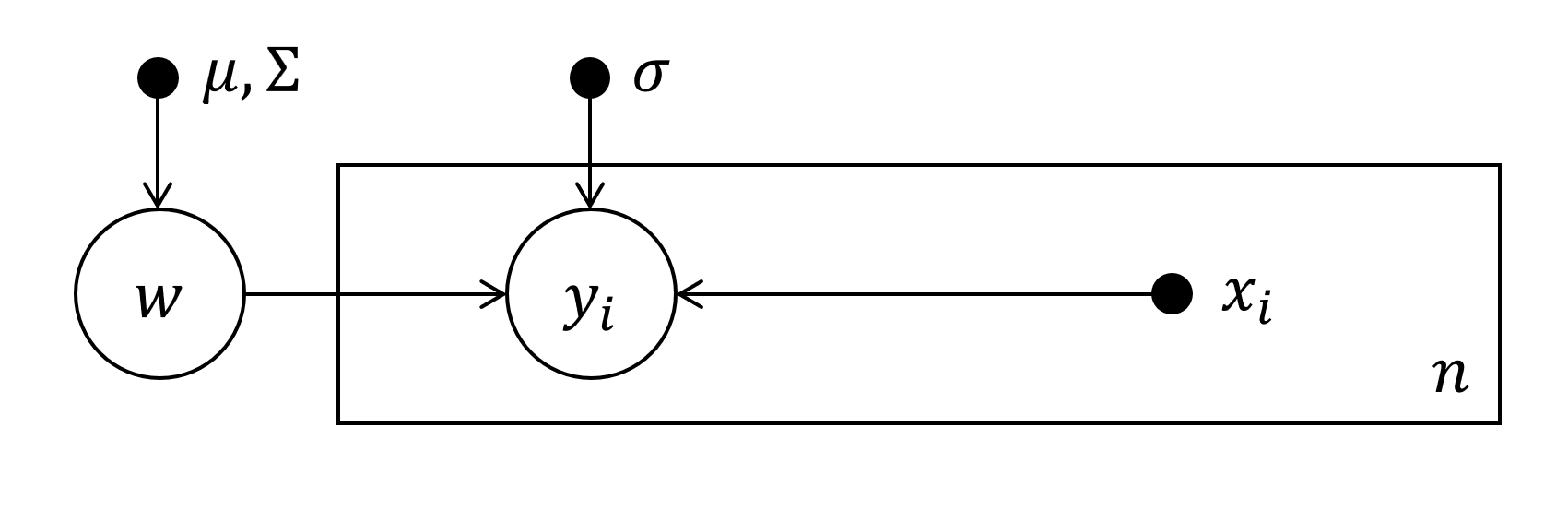

- Directed Graphical Models: Directed Graphical Models are models of probabilistic settings where the factorization of a joint probability can be represented by a Directed Acyclic Graph (DAG). To present these a pictorial view is helpful and can be found in the lecture.

- Directed Graphical Model (DGM) aka. Bayesian network: A DGM is a probability distribution over variables

$\{X_1,\dots , X_D\}$ that can be written as

\begin{align*} P(X_1, X_2, \dots X_D) = \prod_{i=1}^D p(X_i\vert \mathrm{pa}(X_i)) \, , \end{align*}where $\mathrm{pa}(X_i)$ are the parental variables of $X_i$. A DGM can be represented by a Directed Acyclic Graph (DAG) with the propositional variables as nodes and arrows from parents to children.

- An example of such a model in a pictorial view is:

In this case e. g. $\mathrm{pa}(D) = \{ A, B, C \}$ and $\mathrm{pa}(A) = \emptyset$. The full model becomes:

\begin{align*} P(A, B, C, D) = P(D\vert A, B, C)\cdot P(C\vert B, D)\cdot P(A)\cdot P(B) \end{align*}

- Note that the arrows indicate conditional dependence not causality. The independence structure might often be more nuanced, than the DAG suggests.

- There is more to follow on DGMs later.

\begin{align*}

X \indep Y \vert Z\, .

\end{align*}

| Model | Computation Technique |

| Directed Graphical Models (representable by a DAG) |

Continuous Random Variables

- To model continuous random variables one need continuous spaces as $\Omega$ such as $\Omega = \mathbb R^d$. In such spaces it can be shown that not all sets are measurable. To resolve this problem on uses the notion of Topologies which resemble $\sigma$-fields and introduces the so called Borel Algebra which serves as a $\sigma$-algebra for probability modelling.

- Topology: Let $\Omega$ be a space and $\tau$ be a collection of sets. $\tau$ is called a topology on $\Omega$ if

- $\Omega \in \tau$ and $\emptyset \in \tau$.

- Any union of elements of $\tau$ is in $\tau$.

- Any intersection of finitely many elements of $\tau$ is in $\tau$.

- Borel Algebra: Let $(\Omega, \tau)$ be a topological space. The Borel $\sigma$-algebra is the $\sigma$-algebra generated by $\tau$. That is by taking $\tau$ and completing it to include infinite intersections of elements from $\tau$ and all components in $\Omega$ to elements of $\tau$. The Borel algebra sometimes gets denoted by $\mathcal B$ and indeed $(\mathbb R^d, \mathcal B)$ is yet again, a measurable space.

- Probability Density Function (PDF): Let $P$ be a probability measure on $(\mathbb R^d, \mathcal B)$.

$P$ ha the density $p$ if $p$ is a non-negative (Borel-) measurable function on $\mathbb R^d$ satisfying

\begin{align*} P(B) = \int_B p(x)\, \mathrm dx = \int_B p(x)\, \mathrm dx_1\dots \mathrm dx_d \quad \forall \quad B\in \mathcal B\, . \end{align*}

- Cumulative Distribution Function (CDF): For probability measure $P$ on $(\mathbb R^d, \mathcal B)$ the cumulative distribution function is the function

\begin{align*} F(x) = P\left(\prod_{i=1}^d (X_i < x_i)\right )\, . \end{align*}If $F$ is sufficiently smooth, then $P$ has a density, given by\begin{align*} p(x) = \partial^d F\vert_x := \frac{\partial^d F}{\partial x_1 \dots \partial x_d} \Big \vert_x\, . \end{align*}

- Rules for Continuous Random Variables: The rules and notations of discrete random variables can be transferred to continuous random variables mainly by transferring sums to integrals:

- For probability densities $p$ on $(\mathbb R^d, \mathcal B)$ is always holds:

\begin{align*} P(\mathbb R^d) = \int_{\mathbb R^d} p(x) \, \mathrm dx = 1 \end{align*}

- Let $X=(V, W)$ be a random variable with density $p_X$. Then the marginal density of $V$ (analogous for $W$) is given by the sum rule:

\begin{align*} p_V(v) = \int_{\mathbb W} p_X(v, w)\, \mathrm dw\, . \end{align*}

- The conditional density $p(x\vert y)$ (for $p(y) > 0$) is given by the product rule and can be rewritten using Bayes Theorem:

\begin{align*} p(x\vert y) = \frac{p(x, y)}{p(y)} = \frac{p(x)\cdot p(y\vert x)}{\int_{\mathbb X} p(x) \cdot p(y\vert x)\, \mathrm dx}\, . \end{align*}

- Transformation Theorem for PDFs (Omitting some mathematical constraints): Let $X$ be a RV with a PDF $f^X(x)$ and let $\Phi$ be a differentiable mapping. Let $\mathrm D\Phi$ be the Jacobian of $\Phi$ and let the RV $Y$ be $Y = \Phi(X)$ with PDF $f^Y(y)$. Then

\begin{align*} f^Y(y) = \frac{f^X\big( \Phi^{-1} (y)\big)}{\Big \vert \mathrm D \Phi \big( \Phi^{-1}(y) \big) \Big \vert} \, . \end{align*}

- A special and very easy case for this is the Convolution of two RVs. Let $X$ and $Y$ be two independent RVs with PDFs $f^X$ and $f^Y$ and $Z = X+Y$ which yields:

\begin{align*} P(Z=z) = \int_\mathbb R f^X(z-y)f^Y(y) \, \mathrm dy \end{align*}

- Example of a probabilistic inference scheme: For the proportion of people wearing glasses given a sample $X$ and using a uniform prior for the true probability $\pi$ for wearing glasses and

\begin{align*} P(X_i = 1\vert \pi)=\pi \ \ \Rightarrow \ \ P(X_i=0\vert \pi)=1-\pi \, , \end{align*}which leads for a Beta-Distribution for the Posterior:\begin{align*} P(\pi \vert X) = B(n-1,m-1)^{-1}\pi^n(1-\pi)^m\, , \end{align*}when observing a sample with $n$ positive results (wearing glasses) and $m=N-n$ negative results. This actual results for all $\beta$-distributed priors.

- Note that Discrete RVs can be viewed as a special case of Continuous RVs using the Dirac-Measure. A Discrete RV $X$ with $P(X=x) = p_x$ can be described as a continuous RV using the PDF:

\begin{align*} \mathcal P(x) = \sum_{y \, \in\, \mathrm{supp}\, X} p_y \delta(x-y) \, . \end{align*}For this reason sometimes when denoting properties of RVs in general, one sometimes sticks to using the integral notation for continuous RVs. This can also be interpreted in context of measure theory.

Expectations

- Expectation of a Function: Given some RV $X \in \X$ with PDF $\mathcal P(x)$. The Expectation of a function $f: \X \to \mathbb F$ is given by:

\begin{align*} \E_\mathcal P [f] := \int f(x) \, \d \mathcal P(x) = \cases{ \displaystyle\int f(x)\mathcal P(x) \, \d x , & \textsf{continuous RV} \\ \displaystyle\sum_{x\, \in \, \mathrm{supp}\, X} f(x)\mathcal P(x), & \textsf{discrete RV} } \, . \end{align*}Another notation for expectation is using angle-brackets:\begin{align*} \langle f\rangle_\mathcal P = \E_p[f] \, . \end{align*}

- Mean: The Mean is the expectation of the identity function $x\to x$:

\begin{align*} \E[X] = \int x \, \d \mathcal P(x) \, . \end{align*}

- Variance: The Variance is the expectation of the quadratic deviation from the mean:

\begin{align*} \mathrm{Var}\, X = \E\left[(X-\E X)^2\right] =\dots = \E\left[X^2\right] - \E X^2 \, . \end{align*}

- Moments: The $p$-th moment of a RV $X$ is the expectation $\E[x^p]$.

- Entropy: The entropy is the expectation of $f(x)=-\log x$.

Monte Carlo Method

- Monte Carlo Method: Idea: draw a "sample" (or just "sample") a number of $S$ values for $x_s\sim p(x)$ to solve:

\begin{align*} \int f(x)p(x) \, \mathrm d x \approx \frac{1}{S}\sum_{s=1}^S f(x_s) \quad \textsf{and} \quad \int p(x,y) \ \mathrm dx \approx \sum_s p(y\vert x_s )\, . \end{align*}

- Let $\phi = \int f(x)p(x) \, \mathrm d x = \mathbb E_p [f]$. let $x_s \sim p, \ s=1,..., S$ be iid. Then the

Monte Carlo estimator for $\phi$ is given by:

\begin{align*} \hat \phi = \frac{1}{S} \sum_{s=1}^S f(x_s)\, . \end{align*}

- The Monte Carlo estimator is an unbiased estimator, meaning $\mathbb E [\hat \phi] = \phi$.

- The variance of the MC-Estimator drops as $\mathcal O(S^{-1})$:

\begin{align*} \var \hat \phi = S^{-1}\mathrm{Var}(f) \, . \end{align*}

- To achieve really high precision in terms of the standard deviation $\sigma_{\hat \phi} = \sqrt{\mathrm{Var}(\hat \phi)}$ one needs the doubled order of magnitude in sample size, which makes plain MC estimation pretty inefficient.

Sampling by Transformation

- Still the open question remains: How to generate random samples from $\boldsymbol{p(x)}$?

- One can sample from the uniform distribution $U(0,1)$ pretty decent computationally using Pseudorandom Number Generators.

- Sampling by Transformation: Given that $U \sim U(0,1)$ and $X\sim P_X$ with CDF $F(x)$, then $F^{-1}(U)$ is distributed according to $P_X$.

- Example: The exponential distribution $\mathrm{Exp}(\lambda)$ for which $F(x; \lambda) = P(X \leq x;

\lambda) = 1 - e^{-\lambda x}$ with $F^{-1}(y; \lambda) = -\log (y) / \lambda$:

\begin{align*} P\big (F^{-1}(U; \lambda) \leq u\big ) &= P\left( -\frac{\log U}{\lambda} \leq u \right) = P \left( U \geq e^{-\lambda u} \right) = 1 - P \left( U < e^{-\lambda u} \right) \\ &=1 -\int_0^{e^{-\lambda u}} 1\,\mathrm d t=1 - e^{-\lambda u} \, . \end{align*}

Rejection Sampling

- But what to do if we do not know a good transformation?

- What makes sampling hard, is that we need to know the cumulative density everywhere, i.e. a global description of the entire function.

- Practical Monte Carlo Methods aim to construct samples from $p(x) = \tilde p(x) / Z$ assuming that it is possible to evaluate the unnormalized density $\tilde p$ at arbitrary points. Typical example: Compute moments of a posterior:

\begin{align*} p(x\vert D) = \frac{p(D\vert x)p(x)}{\int P(D, x)\mathrm d x} \qquad \textsf{as} \qquad E_{p(x\vert D)}[x^n] \approx \frac{1}{S}\sum_S s_i^n \quad \textsf{with} \ x_i\sim p(x\vert D) \, . \end{align*}

- One possibility to realize that is Rejection Sampling: We want so sample from $p(x) = \tilde p(x) / Z$ which is given up to a constant $Z$. Therefore choose $q(x)$ s. t. $cq(x) \geq \tilde p(x)$ for a fixed $c>0$ and draw $s \sim q(s)$ and $u \sim U(0, cq(s))$. Then accept $s$ to the sample if $u \leq \tilde p(s)$ and reject if $u > \tilde p(s)$.

- This works because:

\begin{align*} P(s\vert u \leq \tilde p (s)) = \frac{P(s, u\leq \tilde p(s))}{P(u\leq \tilde p(s))} = \frac{P(u\leq \tilde p(s) \vert s)P(s)}{P(u\leq \tilde p(s))} = \frac{\tilde p(s)}{cq(s)} \left( \int q(t)\frac{\tilde p(t)}{cq(t)} \, \mathrm dt \right)^{-1} q(s) = p(s)\, . \end{align*}

- Rejection sampling gets very inefficient, if large values of $c$ are needed - especially in higher dimensions (see gaussian example where $c=(\sigma_q / \sigma_p)^D$.

Importance Sampling

- For the next method the Expectation Rewrite-Trick is needed. Suppose a PDF $p(x)$ and a function

$f(x)$ with the expectation $\mathbb E_p[f] = \int f(x)p(x) \, \mathrm dx$ (works also in the discrete case) is to be calculated. Assume another PDF $q(x)$. Then this can be rewritten:

\begin{align*} \mathbb E_p[f] = \int f(x)p(x) \, \mathrm dx = \int q(x) \cdot f(x)\frac{p(x)}{q(x)} \, \mathrm dx = \mathbb E_q \left[ f\cdot \frac{p}{q} \right] \end{align*}

- The latter expectation $\mathbb E_q$ can then be estimated using e.g. MC sampling, meaning reducing it to a sum: $\mathbb E_q[h] \approx S^{-1}\sum_s h(x_s), \ x_s \sim q(x)$.

- An improved version of Rejection Sampling is Importance Sampling: Assume $q(x) > 0$ if $p(x) >0$. Then use the following estimator for a function $f$ (which is the goal of sampling):

\begin{align*} \phi &= \int f(x)p(x) \, \mathrm dx = \frac{1}{Z} \int f(x) \frac{\tilde p(x)}{q(x)}q(x) \, \mathrm dx \overset{\textsf{MC}}{\approx} \frac{1}{S} \sum_s f(x_s) \frac{\tilde p(x_s)/q(x_s)}{\frac{1}{S}\sum_{s'} \tilde p (x_s) / q(x_s) } \\ &=: \sum_s f(x_s) \tilde{w}_s, \quad x_s \sim q(x) \end{align*}

- Note that with unknown $Z$ this is not an unbiased estimator anymore. To estimate $Z$ we used the expectation rewrite-tick on $f=1$ which yields $1 = \mathbb E_p[1] = \mathbb E_q [ p / q ] = E_q[ \tilde p / q] \cdot Z^{-1}$ which ca easily be solved for $Z$. If $Z$ is known this formula can be further simplified leading to replace $\tilde w_s$ with $w_s = p(x_s) / q(x_s)$.

- A flaw of importance sampling is, that $\mathrm{Var}(f\cdot p/q)$, which is important for the convergence rate of this procedure, can be unbounded, because $p(x)/q(x)$ might get very big for small $q(x)$.

Markov Chain Monte Carlo Methods

- Definition of Markov Chains: Let $X$ be a sequence of RVs with a joint distribution $p(x_1, ..., x_N)$. Then this sequence is called a Markov chain, iff the joint PDF obeys the Markov property, i.e.

\begin{align*} p(x_i \vert x_1,...,x_2,...,x_{i-1}) = p(x_i\vert x_{i-1})\, . \end{align*}

- The idea of Markov Chain Monte Carlo (MCMC) sampling is to instead of drawing independently from $p$ to draw conditional on the previous example. This might enable us to "concentrate" on regions of $p$ with high probability and therefore leading to less "rejections" in the image of rejection sampling, i.e. it should be more likely to draw samples from areas where $p(x)$ is high.

- An algorithm must the Detailed Balance Condition: The detailed balance condition states that the algorithm reaches a stationary point of the process of drawing random numbers conditionally. It is:

\begin{align*} p(x)T(x\to x') = p(x')T(x' \to x)\, , \end{align*}where $T(x_1\to x_2)$ is the probability of going from $x_1$ to $x_2$. This is sufficient to assume, that if once $p(x)$ is "reached", all following samples will also be from $p(x)$:\begin{align*} \int p(x) T(x\to x')\, \mathrm dx = \int p(x')T(x\to x') \, \mathrm dx = p(x') \int T(x'\to x) \, \mathrm dx = p(x')\, . \end{align*}In the last step we use, that the transition probability must integrate to $1$ (i.e. some point must be reached).

The Metropolis-Hastings Method

- The Metropolis-Hastings Method: We want to find samples of an (unnormalized) distribution $\tilde p(x)$. Therefore one can follow the following procedure:

- Given a current sample instance $x_t$, draw a proposal $x'\sim q(x' \vert x_t)$ from a distribution $q$ - e.g. $q(x'\vert x_t) = \mathcal N (x';x_t, \sigma^2)$.

- Evaluate the quotient

\begin{align*} a(x', x_t) := \frac{\tilde p(x')}{\tilde p(x_t)} \cdot \frac{q(x_t \vert x')}{q(x'\vert x_t)}\, . \end{align*}

- If $a \geq 1$ accept: $x_{t+1} \leftarrow x'$.

- Else:

- Accept with probability $a$: $\quad x_{t+1} \leftarrow x'$

- Stay with probability $1-a$: $\quad x_{t+1} \leftarrow x_t$

- To show that the detailed balance condition holds for this algorithm plug in

\begin{align*} T(x \to x') = q(x'\vert x) \min \big\{ 1, a(x', x)\big\} \end{align*}

into the detailed balance condition and use that $y \cdot \min\{a,b\} = \min\{ya, yb\}$ if $y > 0$.

- There exist mathematical arguments for existence and uniqueness of the stationary distribution of an MCMC.

- Mixing Problem: Choosing the parameter $\sigma$ of $q$ is not trivial and there is a tradeoff between lots of acceptances and coverage of the complete probability mass of $p$.

- In practice MCMCs are use local operations so they do not have to deal with this problem too much. Therefore the local behavior has to be tuned. One algorithm to address this problem is Gibbs Sampling:

Gibbs Sampling

-

Gibbs Sampling: In Gibbs Sampling one assumes that for a multivariate RV $x=(x_1,...,x_n)$ there exists index sets $I \subset \{1,...,n\}=:N$ for which:

\begin{align*} x_t \leftarrow x_{t-1}; \quad x_{t,I} \sim p(x_{t, I} \vert x_{t, N\setminus I})\, . \end{align*}The algorithm then looks like:

- Given a current sample instance $x_t$, draw a proposal $x'\sim q(x' \vert x_t)$ from

\begin{align*} q(x'\vert x_t) = \delta(x'_{N\setminus I} - x_{t, N\setminus I})\cdot p(x_i'\vert x_{t, N\setminus I}) \end{align*}

- Use the assumption about $x_{t, I}$ to calculate

\begin{align*} p(x') = p(x_I'\vert x'_{N\setminus I}) \cdot p(x_{N\setminus I}')=p(x_I'\vert x_{t, N\setminus I}) \cdot p(x_{t, N\setminus I}) \, , \end{align*}where the latter equality holds because we only update $x_I$ in this step.

- Plugging everything in the formula for $a$ of the Metropolis-Hastings algorithm and using the properties of the $\delta$-distribution $\delta(a-b)=\delta(b-a)$ one observes that $a=1$ always holds, thus the update is always executed.

- Continue with the rest of $N\setminus I$ and add $x'$ to the sample when all $N$ indices have been updated.

- Given a current sample instance $x_t$, draw a proposal $x'\sim q(x' \vert x_t)$ from

- In practice this resembles updating all the dimensions after each other and adding a new point to the sample when every dimension has been updated. To work well, it is necessary, to align the PDF as much to the axes as possible.

- Gibbs sampling will later be applied to Latent Dirichlet Allocation.

Hamiltonian Monte Carlo Methods

- Hamiltonian Monte Carlo (HMC): Hamiltonian Monte Carlo Methods aim - like Gibbs Sampling - at modelling the problem in such a way, that $a=1$. There is some amount of theory necessary to understand it though. Looking at the situation through the lens of physics what is actually done can be interpreted as "moving" $x$ through the space as it was driven by a potential energy which is to be constructed from the $p(x)$:

- Lets consider Boltzmann distributions, i.e. distributions which can be written as

\begin{align*} P(x) = \frac{1}{Z} \exp \big( -E(x)\big) \qquad \textsf{with a normalizing constant $Z$} \end{align*}

which is actually a rather weak assumption because using $\log(.)$ lots of distributions can be rewritten in this way.

- Next augment the state-space by an auxiliary momentum variable $p = \dot x$ and define a

Hamiltonian, which resembles the sum of potential and kinetic energy, via:

\begin{align*} H(x, p) := E(x) + K(p) \qquad \textsf{with e.g.}\ K(p) = \frac{1}{2}p^Tp \, . \end{align*}The case $K(p)=p^Tp/2$ resembles classical, non-relativistic kinetic energy. Note that $K(p)$ must only be dependent of quadratic terms in $p$ (i.e. $p^Tp$) for the dynamics to be time-reversible.

- Now perform Metropolis-Hastings procedures to the joint distribution of $p$ and $x$ which is given by

\begin{align*} P_H(x,p)=\frac{1}{Z_H} \exp\big(-H(x,p)\big) = \frac{1}{Z_H} e^{-E(x)}\cdot e^{-K(p)} \end{align*}and use that the laws of Hamiltonian Mechanics provide a coupling between $p$ and $x$ given by the Hamilton Equations namely:\begin{align*} \dot x = \frac{\partial H}{\partial p} \, , \qquad \textsf{and} \qquad \dot p = - \frac{\partial H}{\partial x}\, . \end{align*}This assures that $H$ is actually a constant w.r.t. time:\begin{align*} \frac{\mathrm dH}{\mathrm dt} = \frac{\partial H}{\partial x} \frac{\partial x}{\partial t} + \frac{\partial H}{\partial p}\frac{\partial p}{\partial t} = 0 \, . \end{align*}This assures that $\boldsymbol{P_H(x',p')=P_H(x,p)}$ for the Metropolis-Hastings procedure when interpreting the Markov Chain steps as time steps.

- The proposal-part of the algorithm is thereby practically omitted, because generating a sample $(x', y')$ by time propagation given by $H$ is already the proposal of a new point. The dynamics of such a system are time-reversible (see here), which resembles the Detailed Balance Condition to be fulfilled.

- Because the distribution $P_H(x,p)$ factorizes to $P_H(x,p) = P_E(x)P_K(p)$ we are now able to sample from

$P_E(x)=p(x)$ with accepting each proposal by the cost of solving an ordinary differential equation (ODE) of the first order namely

\begin{align*} \frac{\mathrm d}{\mathrm dt} \begin{pmatrix} x(t) \\ y(t) \end{pmatrix} = \begin{pmatrix} p(t) \\ -\nabla_x E(x(t)) \end{pmatrix} \end{align*}which can be done numerically with pretty high precision (see e.g. Runge-Kutta Methods). However computing these numerically introduces some "noise" to the process and forces us to keep a decision rule as before (see e.g. Vishnoi, 2021).

- HMC has the flaw of needing us to set the step size of the ODE-integrator and the number of steps which should be propagated before a new sample is fixed. Especially with too big step sizes (but also with to long integration) the dynamics tend to move back and forth through the parameter-space and end up ending close to the start point. This is called the U-Turn Problem, which can be further addressed (e.g. see Hoffman and Gelman, 2011).

| Model | Computation Technique |

| Directed Graphical Models (representable by a DAG) | Monte Carlo Sampling and extensions |

The Gaussian Distribution

- Univariate Gaussian Distribution: Let $X$ be gaussian distributed with mean $\mu$ and Variance

$\sigma^2$, i.e. $X\sim \mathcal N (\mu, \sigma^2)$ then its PDF is given by:

\begin{align*} \mathcal N (x; \mu, \sigma^2)=\frac{1}{\sigma\sqrt{2\pi}} \exp \left(-\frac{(x-\mu)^2}{2\sigma^2}\right) \, . \end{align*}

- Closeness under multiplication: The product of two gaussian PDFs is still a gaussian PDF, therefore it is convenient to set up a model assuming $X\sim \mathcal N (\mu, \sigma^2)$ and $Y\vert X\sim \mathcal N(x,\nu^2)$ because then it follows:

\begin{align*} X \vert Y \sim \mathcal N(m, s^2) \qquad \textsf{with} \quad s^2 = \frac{1}{\sigma^{-2}+\nu^{-2}}, \quad m = \frac{\sigma^{-2}\mu + \nu^{-2}y}{\sigma^{-2}+\nu^{-2}}\, , \end{align*}which can easily be extended to $Y$ consisting of iid samples $Y_1, ..., Y_n$. For parameter estimation it is then useful to rewrite the above terms to $s^{-2}$ and $s^{-2}m$ because then estimation can be done via summation of the so called sufficient statistics of this problem, namely $\sum_i \nu_i^{-2}$ and $\sum_i \nu_i^{-2}y_i$.

- Multivariate Gaussian Distribution: Let $X$ be gaussian distributed with mean $\mu \in \mathbb R^{n}$ and Covariance-Matrix $\Sigma\in \mathbb R^{n\times n}$ which is symmetric and positive definite (therefore invertible), i.e. $X\sim \mathcal N (\mu, \Sigma)$ then its PDF is given by:

\begin{align*} \mathcal N(x; \mu, \Sigma)=\frac{1}{(2\pi)^{n/2}\vert \Sigma \vert^{1/2}} \exp \left(-\frac{1}{2} (x-\mu)^T\Sigma^{-1}(x-\mu) \right) \, . \end{align*}

- Algebraic Trick: The Gaussian PDF obeys the following algebraic rule, which can be useful when solving Integrals to leverage the normalization condition:

\begin{align*} \mathcal N(a; b, C) = \mathcal N(b; a, C)\, . \end{align*}

- Product of two Gaussian distributions: One gets:

\begin{align} \mathcal N(x; a, A) \cdot \mathcal N(x; b, B) = \mathcal N(x; c, C)\cdot Z \quad \textsf{with} \quad C=(A^{-1}+B^{-1})^{-1} \, ,\ \ c = C(A^{-1}a+B^{-1}b)\, , \ \ Z=\mathcal N(a; b, A+B) \, . \label{eq:gauss_prod} \end{align}This leads to an analogous implication as stated corresponding to "closeness" above. Combining this (exactly: that $Z$ is normalized) with the algebraic trick from above this also yields:\begin{align*} \int \mathcal N(y; Qx, \Sigma) \cdot \mathcal N(x; \mu, \Lambda) \, \mathrm dx = \mathcal N (y; Q\mu, Q\Lambda Q^T + \Sigma)\, . \end{align*}

- Affine Transformations of Gaussians: Transform a RV $X$ by $AX+b$ where $A$ is a matrix of necessary size, one gets:

\begin{align*} AX+b \sim \mathcal N (A\mu+b, A\Sigma A^T) \, . \end{align*}

- The Central Limit Theorem states that for a lot of distributions $D(\theta)$ (which have to obey certain criterions) the sum of iid $X_i \sim D(\theta)$ is normally distributed. This is the reason why in nature many observables are indeed gaussian distributed when measured.

- Maximum Entropy: Of all probability distributions over $\mathbb R$ with a specified mean $\mu$ and variance $\sigma^2$, the normal distribution $\mathcal N(\mu, \sigma^2)$ is the one with maximum entropy $H=\int p(x) \log p(x) \, \mathrm dx$. Given some knowledge of variational calculus the proof for this property is surprisingly straightforward (see it incomplete on Wikipedia).

- Besides the two reasons above, the Gaussian Distribution is also widely used because of its nice properties according conditionals and marginals.

- Matrix Inversion and Determinant Lemma: When working with multivariate gaussian distributions two very useful results from Linear Algebra are:

\begin{align*} \big(Z+UWV^T \big)^{-1} &= Z^{-1} - Z^{-1} U \big(W^{-1}+V^TZ^{-1}U\big)^{-1}V^TZ^{-1} \\ \big\vert Z+UWV^T \big\vert &= \vert Z\vert \cdot \vert W\vert \cdot \big\vert W^{-1} + V^T Z^{-1}U\big\vert\, . \end{align*}

- Block Matrix Inversion: For inverting block covariance matrices also the following property from Linear Algebra is useful. If $P$ and $M$ (see below) are invertible then:

\begin{align*} A = \begin{pmatrix} P & Q \\ R & S \end{pmatrix} \, , \quad M:= \big(S-RP^{-1}Q\big)^{-1} \qquad \Rightarrow \qquad A^{-1} = \begin{pmatrix} P^{-1}+P^{-1} Q M R P^{-1} & -P^{-1}QM \\ -M R P^{-1} & M \end{pmatrix} \end{align*}There exist different forms if instead of $P$ one of the other three blocks is invertible but they can be transformed into each other by using equations of the form\begin{align*} \begin{pmatrix} 0 & \boldsymbol 1 \\ \boldsymbol 1 & 0 \end{pmatrix} \cdot \begin{pmatrix} S & R \\ Q & P \end{pmatrix} \cdot \begin{pmatrix} 0 & \boldsymbol 1 \\ \boldsymbol 1 & 0 \end{pmatrix} = \begin{pmatrix} P & Q \\ R & S \end{pmatrix} \, . \end{align*}To calculate the inverse then calculate the inverse of the left side and use $(ABC)^{-1} = C^{-1}B^{-1}A^{-1}$ with.\begin{align*} \begin{pmatrix} 0 & \boldsymbol 1 \\ \boldsymbol 1 & 0 \end{pmatrix}^{-1} = \begin{pmatrix} 0 & \boldsymbol 1 \\ \boldsymbol 1 & 0 \end{pmatrix} \, . \end{align*}

- The last two properties can be used to derive the following "theorems" (although they are not formulated as proper theorems here 😉).

- Marginals of a Gaussian: The marginal of a gaussian distribution is again a gaussian distribution, i.e. (full derivation):

\begin{align*} Z = \begin{pmatrix} X_1 \\ X_2 \end{pmatrix} \sim \mathcal{N} \left[ \begin{pmatrix} \mu_1 \\ \mu_2 \end{pmatrix} , \begin{pmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{pmatrix} \right] \qquad \Rightarrow \qquad X_1 \sim \mathcal N(\mu_1, \Sigma_{11}) \end{align*}

- Conditionals of a Gaussian: The conditional of a gaussian distribution is again a gaussian distribution, i.e. (full derivation):

\begin{align}\begin{split} Z = \begin{pmatrix} X_1 \\ X_2 \end{pmatrix} \sim \mathcal{N} \left[ \begin{pmatrix} \mu_1 \\ \mu_2 \end{pmatrix} , \begin{pmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{pmatrix} \right] \qquad \Rightarrow \qquad X_1\vert X_2=a \sim \mathcal N \Big( \underbrace{\mu_1 + \Sigma_{12}\Sigma_{22}^{-1}(a-\mu_2)}_{\mu_{1\vert 2}}, \ \ \underbrace{\Sigma_{11}-\Sigma_{12}\Sigma_{22}^{-1} \Sigma_{21}}_{\Sigma_{1\vert 2}} \Big) \end{split} \label{eq:conditionals_gaussian} \end{align}with a special case being $X_2 = AX_1$:\begin{align*} X\vert AX=a \sim \mathcal N\Big( \mu + \Sigma A^T (A\Sigma A^T)^{-1}(a-A\mu), \ \ \Sigma - \Sigma A^T (A\Sigma A^T)^{-1}A\Sigma \Big) \end{align*}

- Bayesian Inference with Gaussians:

- Using the two rules above one can summarize: If $X \sim \mathcal N(\mu, \Sigma)$ and $Y\vert X=x \sim

\mathcal N(Ax+b, \Lambda)$, then

\begin{align}\begin{split} Y &\sim \mathcal N\Big(A\mu + b, \ \ \Lambda + A\Sigma A^T\Big) \qquad \textsf{and} \\ X\vert Y=y &\sim \mathcal N \Big( \mu + \underbrace{\Sigma A^T \big( A\Sigma A^T + \Lambda^{-1}\big)^{-1}}_{\textsf{gain}} \underbrace{\big(y - (A\mu +b)\big)}_{\textsf{residual}} , \ \ \Sigma - \Sigma A^T \big(\underbrace{A\Sigma A^T + \Lambda}_{\textsf{Gram matrix}}\big)^{-1} A\Sigma \Big) \\ &= \mathcal N \Big( \big(\underbrace{\Sigma^{-1} + A^T\Lambda^{-1}A}_{\textsf{precision matrix}}\big)^{-1} \big( A^T\Lambda^{-1}(y-b)+\Sigma^{-1}\mu \big) , \ \ \big(\underbrace{\Sigma^{-1} + A^T\Lambda^{-1}A}_{\textsf{precision matrix}}\big)^{-1} \Big) \, . \end{split} \label{eq:bayes_gaussian} \end{align}

- This maps the complicated task of probabilistic inference with the calculations of integrals (exponentially hard in the number of parameters) to a linear algebra problem (at most cubic complexity in the number of parameters). Both formulations of $X \vert Y=y$ are useful, depending of the sizes of $X$ and $Y$ to save computational costs.

- A special case of this is $X\sim \mathcal N(\mu, \Sigma)$ and $Y\vert X \sim \mathcal N (A^Tx + b,

\Lambda)$ with

\begin{align*} B^TX +c \vert Y=y \sim \mathcal N \Big( B^T\mu + c + B^T \Sigma A \big( A^T \Sigma A + \Lambda \big)^{-1}(y-A^T\mu-b), \ \ B^T\Sigma B - B^T \Sigma A \big(A^T \Sigma A + \Lambda \big)^{-1} A^T \Sigma B \Big) \, . \end{align*}

- Using the two rules above one can summarize: If $X \sim \mathcal N(\mu, \Sigma)$ and $Y\vert X=x \sim

\mathcal N(Ax+b, \Lambda)$, then

- In Lecture 6 Prof. Hennig provides practical examples (including nice tricks) on how to use this machinery.

- Reading Marginal and Conditional Independence from Gaussians: Given a multivariate RV

$X=(X_1,...,X_n)$ with $X\sim \mathcal N (\mu, \Sigma)$. Let $i,k \in \{1,..., n\}, \ i\neq k$ and let

$X_{\setminus i}$ be the RV containing all $X_j$ except $X_i$. Then:

- The marginal distributions of $X_i$ and $X_k$ are independent if $\Sigma_{ik}=\Sigma_{ki}=0$.

- The conditional distributions of $X_i, X_k\vert X_{\setminus i, k}$ are independent if $(\Sigma^{-1})_{ik}=(\Sigma^{-1})_{ki}=0$.

Gaussian Parametric Regression

- Supervised Regression:

- Given is a dataset

\begin{align*} \left\{(x_i, y_i)\, \vert \, x_i \in \mathbb R^{d-1}, \ y_i \in \mathbb R^F, \ i=1,\dots ,N\right\} \, , \end{align*}with $d, F\in \mathbb N$, for which the following model is proposed:\begin{align*} Y\vert f \sim \mathcal N (f(X), \sigma^2\boldsymbol 1) \, . \end{align*}Let $X$ denote the complete dataset of $x_i$'s and analogous for $Y$ and $y_i$'s. For $d=2, \ F=1$ this reduces to good ol' Linear Regression.

- Assume that $d$ is a linear function, i.e.

\begin{align*} f(x_i)=f_{x_i}=w_0 + x_i^TW_1, \quad \textsf{with} \quad w_0 \in \mathbb R^F \ \ \textsf{and} \ \ W_1 \in \mathbb R^{(d-1) \times F} \end{align*}and use the notation\begin{align*} \phi(X) = \begin{pmatrix} 1 & 1 & \dots & 1 \\ x_{11} & x_{21} & \dots & x_{N, 1} \\ \vdots & \vdots & \ddots & \vdots \\ x_{1, d-1} & x_{2, d-1} & \dots & x_{N, d-1} \end{pmatrix} \in \mathbb R^{d\times N} \, , \qquad W = \begin{pmatrix} w_0^T \\ W_1 \end{pmatrix} \in \mathbb R^{d \times F} \end{align*}then\begin{align*} f_X = \phi_X^TW \in \mathbb R^{N\times F} \end{align*}contains the "somewhat prediction" for each $y_i$ row-wise.

- Choose a gaussian prior for $W$, i.e. $\tilde W\sim \mathcal N(\mu, \Sigma)$, where $\tilde W$ is an unrolled version of $W$ with all elements of $\tilde W$ in an $d\cdot F$-dimensional vector and $\Sigma \in \mathbb R^{d\cdot F\times d\cdot F}$. Going on from here without restricting the dimensions or some assumption about independence of certain dimensions of $Y$ gets verbose because therefore we would need to construct a matrix distribution for $W$, which can be done using the Matrix Normal Distribution though. Nevertheless let from now be $\boldsymbol{F=1}$ which yields $\Sigma \in \mathbb R^{d \times d}$ and $f_X\in \mathbb R^N$ and therefore $f_X \sim \mathcal N (\phi_X^T\mu, \ \phi_X^T \Sigma \phi_X)$.

- For inference of $f$ all that is left is to calculate the posterior of $W$ using what is given:

\begin{align*} P(W\vert Y, \phi_X) \propto P(Y\vert W, \phi_X) \cdot P(W) \, . \end{align*}We use the second formulation of \eqref{eq:bayes_gaussian} because then only $d \times d$ matrices need to be inverted whereas in the other formulation $N\times N$ matrices would need to be inverted:\begin{align*} \Rightarrow \quad W\vert Y=y, \phi_X \sim \mathcal N \Big( \big(\Sigma^{-1}+\sigma^{-2}\phi_X\phi_X^T\big)^{-1}\big( \Sigma^{-1}\mu + \sigma^{-2}\phi_X y\big), \ \ \big(\Sigma^{-1}+\sigma^{-2}\phi_X\phi_X^T\big)^{-1} \Big) \, , \end{align*}

- The posterior for $W$ can be easily translated to a posterior in $f$ by applying $\phi_x$, where $x$ can be any data-instance that is to be evaluated.

\begin{align*} f_x \vert Y=y, \phi_X \sim \mathcal N \big( \phi_x^T \mu_{W\vert Y=y; \phi_X}, \ \phi_x^T \Sigma_{W\vert Y=y; \phi_X} \phi_x\big) \end{align*}where mean and covariance matrix from above are used.

- Given is a dataset

- Solving Linear System of Equations instead of Inverting:

- In practice it is typically not a good idea to try to invert matrices directly when building an algorithm because often the actual numerically evaluated matrices are not invertible (e.g. because of rounding and numerical instabilities).

- What is therefore done instead is to rewrite the problem: Let $A$ be an (invertible) matrix and $X$ and $Y$ be matrices of suitable size. If one has to compute $X=A^{-1}Y$ in a computation this can be done without directly computing $A^{-1}$ by solving the linear system of Equations $AX=Y$ for $X$.

- If instead $X=YA^{-1}$ needs to be computed, solve $A^TX^T=Y^T$ for $X^T$ and transpose the result yet again.

- If $A$ is symmetric positive definite, it is useful to use the Cholesky Decomposition $LL^T=A$, where $L$ is a (upper) triangular matrix. Then first solve $LZ=Y$ for $Z$ and then $L^TX=Z$ for $X$. For example in scipy this can be done in one step using cho_solve. This is especially efficient if the matrix $A$ needs to be used in this fashion multiple times, because then precomputing the Cholesky decomposition saves computational costs.

- Computing the Cholesky decomposition can be done pretty straightforward with e.g. the Banachiewicz and Cholesky Algorithm with the complexity of $\mathcal{O}(n^3)$ where $A\in \mathbb R^{n\times n}$.

- Polynomial Regression: Suppose $f=2 \ \Rightarrow x_i \in \mathbb R$. For polynomial regression replace $\phi_x = (1, \, x)^T$ with:

\begin{align*} \phi_x = (1,\, x,\, x^2,\,...,\,x^d), \quad d \in \mathbb N \, . \end{align*}

- "Underestimation" of Uncertainty: In the way we used Bayesian gaussian regression so far, the variance of the posterior $W\vert Y=y; \phi_X$ or $f_x\vert Y=y; \phi_X$ does not depend on the observed data $y$. This leads to an overconfident prediction of the "area" in which $f(x)$ should be for "new" observations $x$.

- Generalized Regression: Nothing in the Bayesian Regression framework hinders us from choosing arbitrary functions in $\phi_X$, which is pretty cool. Choosing e.g. $\phi_x=(\cos x,\, \sin x,\, \cos(2x), \, \sin (2x),\,...)^T$ or step-functions (using the Heaviside Step Function) is also valid, and even only requires minimal modifications in a program which does the job!

- Multivariate Outputs: Above we restricted the general discussion to $y\in \mathbb R$ when we noticed wo would have to use the somewhat unhandy Matrix normal distribution to go on if we want higher dimensional outputs. Otherwise one can also do gaussian regression for each output feature separately to cover multivariate output.

- Two Sides of the Coin: Being able to choose the prior function-space with little restrictions makes gaussian regression in this fashion very flexible. On the other side we get the difficulty to be forced to do at least some choice of feature space which might be not optimal in the end.

| Model | Computation Technique |

| Directed Graphical Models (representable by a DAG) | Monte Carlo Sampling and extensions |

| Gaussian Distributions | Gaussian Inference by Linear Algebra |

Learning Representations

- Feature Selection: There is an infinite-dimensional space of feature functions to choose from. One approach is to restrict oneself to a finite-dimensional sub-space and search in there, e.g.

\begin{align*} \phi_i(x; \theta) = \frac{1}{1 + \exp \left(-\frac{x-\theta_1}{\theta_2}\right)} \end{align*}

- Hierarchical Bayesian Inference: The generalization of the approach above is to restrict the feature space to some function $\phi_i(x; \theta)$ and make $\theta$ part of the inference, i.e. assume a prior for them and infer them via Bayesian inference. Note that if the features are do not depend linearly on $\theta$ - which would not be a good choice anyway because linear dependence can be absorbed in the $W$'s from before - it is not possible to leverage the properties of gaussian distributions again to reduce the inference to linear algebra operations. This is because all the machinery from above was derived from the affine transformation relation of gaussian distributed RVs and here $\phi(x; \theta)$ is not affine w.r.t. $\theta$.

- Maximum Likelihood Estimation (ML / MLE): Because inference then might be intractable in many cases one therefore instead can reduce oneself to not do a full inference including a prior and a posterior for

$\theta$ but instead for some given data $(x, \, y)$ maximize the likelihood of observing the data $y$ given

$x$ and the parameters $\theta$ in $\theta$:

\begin{align*} \hat \theta = \underset{\theta}{\mathrm{argmax}} \big( p(y \vert x, \theta) \big) = \underset{\theta}{\mathrm{argmax}} \int p(y\vert f, x, \theta) p(f \vert x, \theta)\, \mathrm df \end{align*}

where integration over $f$ can be transferred to an integration over $W$ as before.

- For the gaussian setting from the closeness of the gaussian PDF under multiplication one can conclude:

\begin{align*} \underbrace{\mathcal N(y; {\phi_X^\theta}^T W, \Lambda)}_{P(y\vert f, x, \theta)} \cdot \underbrace{\mathcal N(f; {\phi_X^\theta}^T\mu, \Sigma)}_{P(f\vert x, \theta)} = \underbrace{\mathcal N(f; \tilde m_{\textsf{post}}^\theta, \tilde V_{\textsf{post}}^\theta)}_{P(f\vert y, x,\theta)} \cdot \mathcal N(y; {\phi_X^\theta}^T\mu, \ {\phi_X^\theta}^T \Sigma \phi_X^\theta + \Lambda) \end{align*}Where the equality can be shown by using $\mathcal N(x; y, C) = \mathcal N(y; x, C)$. Applying Bayes theorem in reverse to this formula lets us identify:\begin{align*} Y\vert \theta, X \sim \mathcal N ({\phi_X^\theta}^T\mu, \ {\phi_X^\theta}^T \Sigma \phi_X^\theta + \Lambda) \end{align*}

- This leads to the MLE-problem:

\begin{align*} \hat \theta &= \underset{\theta}{\mathrm{argmax}} \, \mathcal N(y; {\phi_X^\theta}^T\mu, \ {\phi_X^\theta}^T \Sigma \phi_X^\theta + \Lambda) \\ & = \underset{\theta}{\mathrm{argmin}} \, \frac{1}{2} \left( \underbrace{ \Big( y-{\phi_X^\theta}^T\mu \Big)^T \Big( {\phi_X^\theta}^T \Sigma {\phi_X^\theta} + \Lambda \Big)^{-1} \Big( y-{\phi_X^\theta}^T\mu \Big) }_{\textsf{square error}} + \underbrace{ \log \Big\vert {\phi_X^\theta}^T \Sigma {\phi_X^\theta} + \Lambda \Big\vert }_{\textsf{model complexity / Occam factor}} \right) \end{align*}where the following common trick is used:\begin{align*} \underset{\theta}{\mathrm{argmax}}\, f(\theta) = \underset{\theta}{\mathrm{argmin}}\, \Big( - \log f(\theta) \Big) \, . \end{align*}

- The model complexity / Occam factor acts as a regularization term.

- Maximum Aposteriori Estimation (MAP): When one wants to include prior information about $\theta$ in the model, one does so by assuming some prior distribution. $\hat \theta$ is then estimated as the mode of the

posterior distribution $p(\theta\vert y, x)$:

\begin{align*} \hat \theta = \underset{\theta}{\mathrm{argmax}} \, \Big( p(\theta \vert y, x)\Big) = \underset{\theta}{\mathrm{argmax}} \, \Big( p(y\vert x, \theta)p(\theta)\Big) = \underset{\theta}{\mathrm{argmin}} \, \Big( - \underbrace{\log p(y\vert x, \theta)}_{\textsf{Log-Likelihood}} - \underbrace{\log p(\theta)}_{\textsf{Log-Prior}} \Big) \, . \end{align*}where typically the Log-Prior takes the role of a regularization. The first equality holds because the marginal $p(y\vert x) = \int p(y \vert \theta, x) p(\theta) \, \mathrm d\theta$ does not depend on $\theta$.

- A linear Gaussian regressor is actually equivalent to a single (hidden) layer neural network with quadratic output loss, and fixed input layer.

- The optimization Process can be done by building a computation graph for all the computations necessary to compute the Log-Likelihood - or more general the target function - and using automatic differentiation (autodiff) which is basically the chain rule applied to the graph by a computer. A more detailed description of autodiff and especially reverse mode autodiff (which is very similar to backpropagation) can be found in this Lecture-Part.

- Connection to Deep Learning: In the single layer picture the Bayesian linear regression setting is similar to the optimization problem for a regression neural network when using MAP. Given that the samples

$y_i$ are drawn iid it is (omitting the $x$'s)

\begin{align*} p(y \vert W, \phi^\theta) = \prod p(y_i\vert W, \phi^{\theta}_i) = \prod \mathcal N(y_i; {\phi^{\theta}}^TW, \sigma^2)\, . \end{align*}Optimizing wrt. $W$ and $\theta$ yields:\begin{align*} \underset{\theta, W}{\mathrm{argmax}} \, P(W, \theta \vert y) &= \underset{\theta, W}{\mathrm{argmin}} \Big(- \log P(W, \theta \vert y) \Big) \\ &= \underset{\theta, W}{\mathrm{argmin}} \Big(- \log P(y\vert W, \theta)\cdot P(W, \theta) \Big)\\ &= \underset{\theta, W}{\mathrm{argmin}} \left(- \log P(W, \theta) + \frac{1}{2\sigma^2} \sum \big\vert\big\vert y_i - {\phi^{\theta}}^TW \big\vert\big\vert^2 \right)\, , \end{align*}which resembles minimizing the mean squared error ans some regularization. Note that for e. g. $\theta_i \overset{\mathrm{iid}}{\sim} \mathcal N (0, 1)$ it would be $-\log P(\theta) = \sum \theta_i^2$ which leads to L2-regularization.

| Model | Computation Technique |

| Directed Graphical Models (representable by a DAG) | Monte Carlo Sampling and extensions |

| Gaussian Distributions | Gaussian Inference by Linear Algebra |

| (Deep) Learnt Representations | Maximum Likelihood / Maximum Aposteriori |

Gaussian Processes

- In the last part we explicitly chose feature by defining feature functions which depended on parameters $\theta$ which were determined via ML or MAP estimation. In this part we will use another approach by increasing the number of features.

- We shorten the notation by introducing the mean function and the covariance function a. k. a.

kernel:

\begin{align*} m_x: \mathbb X \to \mathbb R ,\ x \mapsto \phi_x^T \mu \, , \qquad k_{ab}: \mathbb X \times \mathbb X \to \mathbb R , \ (a, b) \mapsto \phi_a^T \Sigma \phi_b \end{align*}which yields (compare "Gaussian Linear Regression"):\begin{align*} f_x \vert Y=y, \phi_X \sim \mathcal N \Big( m_x + k_{xX} \left(k_{XX} + \sigma^2 \boldsymbol 1\right)^{-1}(y-m_X), \ k_{xx} - k_{xX} \left(k_{XX}+\sigma^2 \boldsymbol 1\right)^{-1}k_{Xx} \Big) \, . \end{align*}

- For two input points $x_i$ and $x_j$ the kernel has the structure of a sum (assuming that $\Sigma$ is diagonal). For specific feature choices one can increase the number of features to infinity by transferring the sum to an integral. E. g. consider the (scaled) covariance matrix

- Typically one then sets $\mu=0$ (assuming one has subtracted the mean from the data, which can be done easily) and redefines $m_x=0$ and $k_{ab}$ to the kernel chosen. For gaussian (bell-shaped) feature function solving the integral above for $c_{\mathrm{max}} \to \infty$ and $c_{\mathrm{min}} \to -\infty$ this takes the form (also called the RBF kernel):

\begin{align*} k_{x_ix_j} = \sqrt{2\pi}\lambda \sigma^2 \exp \left( - \frac{(x_i-x_j)^2}{4\lambda^2}\right)\, . \end{align*}

- This procedure can be abstracted to the formal definition of (Mercer) Kernels which can then be used to define Gaussian Processes:

- (Mercer / positive definite) Kernel $k : \mathbb X \times \mathbb X \to \mathbb R$ is a (Mercer / positive definite) kernel if, for any finite collection $X=[x_1,..., x_N]$, the matrix $k_{XX} \in \mathbb R^{N\times N}$ with $[k_{XX}]_{ij} = k(x_i, x_j)$ is positive semidefinite.

- Every kernel which is constructed as in the example above is symmetric and can easily shown to be a Mercer kernel:

\begin{align*} v^Tk_{XX} v = v^T \left[\sum_l \phi_l(x_i) \phi_l(x_j) \right]_{ij} v = \sum_l \sum_{i=1}^N v_i\phi_l(x_i) \sum_{i=1}^N \phi_l(x_j) v_j = \sum_l \left( \sum_{i=1}^N v_i\phi_l(x_i) \right)^2 \geq 0\, . \end{align*}

- Gaussian Process: Let $\mu: \mathbb X \to \mathbb R$ be any function and $k:\mathbb X \times \mathbb X \to \mathbb R$ be a Mercer kernel. A Gaussian Process $p(f) = \mathcal G \mathcal P(f; \mu , k)$ is a probability distribution over the function $f:\mathbb X \to \mathbb R$, such that every finite restriction to function values $f_X:=[f_{X_1},..., f_{X_N}]$ is a Gaussian distribution $p(f_X) = \mathcal N (f_X; \mu_X, k_{XX})$.

- When choosing normalization constants for the kernel (or choosing a bound kernel directly) each feature contributes only an infinitely small amount to the overall posterior.

- There exist several kernels which for typical feature choices such as the RBF kernel for gaussian features, and the cubic spline kernel for ReLU features.

- A flaw of Gaussian process Models is, that for the reformulation of $p(f)$ to the kernel-notation, we needed to use the formulation of gaussian inference which lead to $N \times N$ dimensional matrices which need to be inverted. There exist some approximations to speed up modelling though.

- The discussed formalism gives rise to the question of how large the space of possible kernels / Gaussian Process Models generally is. On can show the if $\phi: \mathbb Y \to \mathbb X$ and $k_1,k_2 :\mathbb X \times \mathbb X \to \mathbb R$ are Mercer kernels, then

- $\alpha \cdot k_1(a,b)$ for $\alpha \in \mathbb R_+$

- $k_1 \big( \phi(c), \phi(d) \big)$ for $c, d \in \mathbb Y$

- $k_1(a,b) + k_2(a,b)$

- $k_1(a,b) \cdot k_2(a,b)$ (also known as Schur Product Theorem)

- Note that also parameters of kernels can be learned analogous as in "Learning Representations", e. g. by using ML or MAP estimation.

\begin{align*}

\Sigma = \frac{\sigma^2 (c_{\mathrm{max}}-c_{\mathrm{min}})}{F}\boldsymbol 1 \qquad \textsf{and} \qquad

\phi_l(x) = \exp \left( - \frac{(x-c_l)^2}{2\lambda^2} \right)\, , \ \ l =1,\dots F\, .

\end{align*}

This is placing $F$ bell-shaped features in a range $c_{\mathrm{min}}$ to $c_{\mathrm{max}}$ on the real axis. This yields:

\begin{align*}

\phi(x_i)^T\Sigma \phi(x_j) &= \frac{\sigma^2 (c_{\mathrm{max}}-c_{\mathrm{min}})}{F} \sum_{l=1}^F \exp \left( - \frac{(x_i-c_l)^2}{2\lambda^2} \right) \exp \left( - \frac{(x_j-c_l)^2}{2\lambda^2} \right) = \dots \\

&= \frac{\sigma^2 (c_{\mathrm{max}}-c_{\mathrm{min}})}{F} \exp \left( - \frac{(x_i-x_j)^2}{4\lambda^2} \right)

\sum_{l=1}^F \exp \left( - \frac{\left(c_l - \frac{1}{2}(x_i+x_j)\right)^2}{\lambda^2} \right) \, .

\end{align*}

When one not increasing $F$ and decreasing the distance between to $c$'s such that $F\cdot \delta c / (c_{\mathrm{max}}-c_{\mathrm{min}})$ remains constant this can be transferred to the integral:

\begin{align*}

\phi(x_i)^T\Sigma \phi(x_j) = \sigma^2 \exp \left(-\frac{(x_i-x_j)^2}{4\lambda^2}\right)

\int_{c_{\mathrm{min}}}^{c_{\mathrm{max}}}\exp \left( - \frac{\left(c_l -

\frac{1}{2}(x_i+x_j)\right)^2}{\lambda^2} \right) \, \mathrm dc\, .

\end{align*}

| Model | Computation Technique |

| Directed Graphical Models (representable by a DAG) | Monte Carlo Sampling and extensions |

| Gaussian Distributions | Gaussian Inference by Linear Algebra |

| (Deep) Learnt Representations | Maximum Likelihood / Maximum Aposteriori |

| Kernels |

Understanding Kernels

- Eigenvalues / Eigenvectors and Spectral Theorem: Let $A$ be a matrix. A scalar $\lambda \in \mathbb C$ and a vector $v \in \mathbb C^n$ are called eigenvalue and corresponding eigenvector if $Av = \lambda v$. Ihe eigenvectors of symmetric matrices $A = A^T$ are in $\mathbb R^n$ and form the basis of the image of $A$ (where $\mathrm{Im} (A) = \{ Ax \vert x \in \mathbb R^n \}$).

- Spectral Theorem: A symmetric positive definite matrix $A$ has positive eigenvalues $\lambda_a >0 \

\forall \ a = 1,...,n$ and can be written a a Gramian (outer product) of the eigenvectors:

\begin{align*} [A]_{ij} = \sum_{a=1}^n \lambda a [v_a]_i [v_a]_j\, . \end{align*}

- Let $V = (v_1,..., v_n)$ and $\Lambda = \mathrm{diag} (\lambda_1,...,\lambda_n)$, then the following properties hold

\begin{align*} A = V\Lambda V^{-1} \, ,\qquad \Lambda = V^{-1} A V \, , \qquad A^{-1} = V^{-1}\Lambda^{-1}V \, . \end{align*}

- Every function which can be written as a power series $f(A) = \sum \alpha_k A^k$ can then be applied to

$A$ as follows:

\begin{align*} f(A) = V f(\Lambda) V^{-1} \, . \end{align*}

- With $\mathrm{det} (AB) = \mathrm{det}(A)\mathrm{det}(B)$ and $\mathrm{tr}(ABC) = \mathrm{tr}(CAB) =

\mathrm{tr}(BCA)$ it is easy to show:

\begin{align*} \mathrm{det}(A) = \prod_{i=1}^n \lambda_i \, , \qquad \mathrm{tr}(A) = \sum_{i=1}^n \lambda_i \, . \end{align*}

- Eigenfunctions on Measure Spaces: Let $(\Omega, \nu)$ be a measure space and $f: \Omega \times \Omega

\to \mathbb R$. A function $\phi: \Omega \to \mathbb R$ and a scalar $\lambda \in \mathbb C$ which obey

\begin{align*} \int f(x, y) \phi(y) \, \mathrm d\nu(y) = \lambda \phi(x) \end{align*}

are called eigenfunction and eigenvalue of $f$ wrt. $\nu$.

- Mercers Theorem / Spectral Theorem of Mercer Kernels: Let $(\mathbb X, \nu)$ be a finite measure space and $k:\mathbb X\times \mathbb X \to \mathbb R$ a continuous (Mercer) kernel. Then there exist eigenvalues/functions $(\lambda_i, \phi_i)_{i\in I}$ wrt. $\nu$ such that $I$ is countable,, all

$\lambda_i$ are real and non-negative, the eigenfunction can be made orthonormal, and the following series converges absolutely and uniformly $\nu^2$-almost-everywhere:

\begin{align*} k(a, b) = \sum_{i\in I} \lambda_i \phi_i(a)\phi_i(b)\quad \forall \quad a, b \in \mathbb X \end{align*}

- It is not coincidence that this resembles very much the spectral theorem for self-adjoint operators in quantum mechanics. All this can be seen as the generalization from countable matrices to uncountable operators.

- Stationary Kernels: A kernel $k(a,b)$ is called stationary if it can be written as $k(a,b)=k(\tau)$ with $\tau = a-b$.

- Borchner's Theorem: A complex-valued function $k$ on $\mathbb R^d$ is the covariance function of a weakly stationary mean square continuous complex-valued random process on $\mathbb R^d$ if, and only if, its Fourier transform is a probability (i. e. finite positive) measure $\mu$:

\begin{align*} k(\tau) = \int_{\mathbb R^d} e^{2\pi \mathrm i s^T \tau} \, \mathrm d \mu(s) = \int_{\mathbb R^d} \left(e^{2\pi \mathrm i s^T a} \right) \left(e^{2\pi \mathrm i s^T b} \right)^*\, \mathrm d \mu(s)\, . \end{align*}

- Connection to Least-Squares Estimate: The least-squares estimate of a function $f$ given data $X$ can be seen as the point estimation of a gaussian posterior. To show this, one need the posterior PDF $p(p_X\vert Y=y)$ and to simplify the calculations, the easiest approach is not to use the full $m_X, k_{ab}$-rewritten expression from above, but instead to take one step back. The whole gaussian regression (and thus also gaussian process) framework is based on the assumptions $Y\vert f_X \sim \mathcal N(f_X, \sigma^2\boldsymbol 1)$ and $W\sim \mathcal N (\mu, \Sigma)$ which is equivalent to $f_X \sim \mathcal N(\phi_X^T \mu, \phi_X^T

\Sigma \phi_X) = \mathcal N(m_X, k_{XX})$. Therefore we have

\begin{align*} p(f_X\vert Y=y) = \frac{p(Y=y\vert f_X) \cdot p(f_X)}{p(Y=y)} \propto \mathcal N(y; f_X, \sigma^2 \boldsymbol 1) \cdot \mathcal N(f_X; m_X, k_{XX})\, , \end{align*}where the denominator is independent of $f_X$. Using this it is fairly easy to derive:\begin{align*} \mathbb E_{p(f_X\vert Y = y)} [f_X] = \underset{f_X}{\mathrm{argmax}} \, p(f_X \vert y) = \underset{f_X}{\mathrm{argmin}} \left( \frac{1}{2\sigma^2} \vert \vert y-f_X\vert \vert^2 + \frac{1}{2} \vert \vert f_X - m_X \vert \vert^2_k \right)\, , \end{align*}where $\vert \vert \xi \vert \vert_k^2 = \xi^T k^{-1}_{XX}\xi$. The first equality holds, because $f_X\vert Y=y$ is normally distributed such that the expectation is equal to the mode.

- Reproducing kernel Hilbert Space (RKHS): Let $\mathcal H = (\mathbb X, \langle \cdot, \cdot \rangle )$ be a Hilbert space of function $f: \mathbb X \to \mathbb R$. Then $\mathcal H$ is called a reproducing kernel Hilbert space if there exists a kernel $k: \mathbb X \times \mathbb X \to \mathbb R$ such that

- for all $x\in \mathbb X: \ \ k(\cdot, x)\in \mathcal H$.

- for all $f \in \mathcal H: \ \ \langle f(\cdot), k(\cdot, x)\rangle_\mathcal H = f(x)$.

\begin{align*} \mathcal H_k = \left\{ f(x) := \sum_{i\in I}\tilde \alpha_i k(x_i, x) \, \Big \vert\, \tilde \alpha_i \in \mathbb R \ \forall \ i \in I \right\} \qquad \textsf{with} \qquad \langle f, g\rangle_{\mathcal H_k} := \sum_{i\in I} \frac{\tilde \alpha_i \tilde \beta_i}{k(x_i, x_i)}\, . \end{align*} - That being said, for a Gaussian Process with $p(f)=\mathcal G\mathcal P(0,k)$ and likelihood $p(y\vert f, X)=\mathcal N(y;f_X, \sigma^2\boldsymbol 1)$ the RKHS os the space of all possible posterior mean function:

\begin{align*} \mu(X) = k_{xX} \underbrace{\left( k_{XX} +\sigma^2\boldsymbol 1 \right)^{-1}y}_{:=w} = \sum_{i=1}^n w_ik(x,x_i)\quad \textsf{with} \quad x_i \in X\, . \end{align*}

Therefore the RKHS can be viewed as the span of posterior mean functions of Gaussian Process regressors. The posterior mean is equivalent to the point estimator for the function in kernel (ridge) regression.

- A similar argument connects the gaussian process expected square error (deviation of the posterior mean from the true function) and the square error in the RKHS point estimate (deviation of the estimate from the true function).

- But when using the representation of the RKHS by the eigenfunctions of the kernel one can show, that draws from a Gaussian Process are not part of the RKHS. Thus the frequentist and probabilistic views are closely related but not the same.

- It can be shown, that there are kernels for which the RKHS lies dense in the space of all continuous functions, i. e. all continuous functions can be approximated infinitely close by element of the RKHS. That means, that Gaussian Process Regressor or Kernel Machines are in principle universal function approximators similar to neural networks. Yet the rate of convergence is not specified by this statement, which still can lead to somewhat "unstable training" or a "convergence" that is so slow (e. g. logarithmic) that it does not converge at all.

- Yet there exist theorems and statements from learning theory which show, that if specific kernels or priors are found, the convergence can be actually pretty decent.

Example of Gaussian Process Regression

- The lecture presents an actual hands-on example on how to apply the framework presented in the last parts, including prior information etc. to infer a future prediction. It is not really practical to try to follow the whole example in these notes, so follow the video (again) if searching for details. There are just some general notes, hints and best practices one can extract from the worked through example.

- It is a good idea to include known quantities in the model, e. g. known standard errors of measure instruments etc.

- It is always a good idea to create some visualizations if possible to get a feeling for the data and as a sanity check.

- Often it is not possible to do reasonably good extrapolations or modelling in general if no further information is included to the model (e. g. via the priors).

- It might be the case, that some "unknown" parameters are actually present in the model, which might be (typically) set to $1$ or $0$. This is for example the case when defining a kernel: it can be scaled arbitrarily and still be a kernel.

- When constructing additional features, one also has to decide for parameter choices.

- When using generative models it might be a good idea to (if possible) draw some samples from the prior before actually fitting the model and compare these with the real data. For Gaussian (Process) Regression this would be sampling from the prior $f(X) \sim \mathcal N (m_X, k_{XX})$. Remember that to sample from this distribution because of the transformation properties of the gaussian distribution on can transform some $Y\sim\mathcal N(0, \boldsymbol 1)$ which is $N$-dimensional via $Z = AY+m_X$ where $A$ is some matrix with $AA^T=k_{XX}$ to get $N (m_X, k_{XX})$ (full derivation by myself on math.stackexchange).

- Adding a small diagonal matrix (e. g. $10^{-9}\boldsymbol 1$) can assure a matrix to be positive definite.

- Linear Combination of Kernels: In the lecture

(also see Rasmussen & Williams, 2006) the overall process $f$ is assumed to be the additive result of sub-processes $f^{(i)}$ and features $\phi^{(l)}$. Each of the processes has its own kernel $k^{(i)}$ and the regression model becomes:

\begin{align*} f(x) = \sum_i f^{(i)} + \sum_k w_k\phi^{(l)} = \sum_i f^{(i)} + \underbrace{w^T\Phi}_{=\Phi^T w} \end{align*}where the weights can be modelled with a prior $w\sim (0, \Sigma_w)$. Together this is a sum of GPs and Gaussians which results in following overall GP for $f$:\begin{align*} f \sim \mathcal{GP}\big(0, k\big) \qquad \textsf{with} \qquad k = \sum_{i} k^{(i)} + \Phi^T \Sigma_w \Phi\, . \end{align*}

- To include $\Sigma_w$ by its own prior might be intractable. In the lecture the approach is to assume

$\Sigma_w = \mathrm{diag}(\theta_1^2,\theta_2^2,...):=\Theta$ which results in the overall kernel to be

\begin{align*} k(\psi) = \sum_{i} k^{(i)}(\varphi) + \Phi^T \Theta \Phi = \sum_{i} k^{(i)}(\varphi) + \sum_k \theta_l^2 {\phi^{(l)}}^T \phi^{(l)} \, , \end{align*}where additional possible hyperparameters are included in the $k^{(i)}$'s, and the set of all hyperparameters $\varphi_i$ and $\theta_i$ is abbreviated with $\psi$.

- The model then proceeds as in usual Gaussian Parametric Regression to model $Y\vert f \sim \mathcal N (f, \sigma^2 \boldsymbol 1)$. The $\psi$'s can be viewed as hyperparameters and using the same calculations as in "Learning Representations" $w\sim \mathcal N \big(0, \mathrm{diag}(\theta_i^2)\big)$ yields $Y\vert \psi \sim \mathcal N (0, k+\sigma^2\boldsymbol 1)$. One can search for optimal hyperparameters e. g. using MLE:

\begin{align*} \hat \psi = \underset{\psi}{\mathrm{argmin}} \big( -2\log p(y\vert \psi) \big) = \underset{\psi}{\mathrm{argmin}} \Big( y^T \big( \underbrace{k_{XX}(\psi) + \sigma^2 \boldsymbol 1}_{=:G} \big)^{-1} y+\log \mathrm{det}\, G \Big) \, . \end{align*}

- The posterior for $\boldsymbol f$ can be evaluated by standard Gaussian Process Regression:

\begin{align*} f_x\vert Y=y \sim \mathcal N \Big(k_{xX}G^{-1}y, k_{xX}-k_{xX}G^{-1}k_{Xx}\Big)\, . \end{align*}

- Posterior estimates of single processes and features: We use the notation $f^{(l)} := w_k\phi^{(l)}$ such that these can be viewed as

\begin{align*} f^{(l)} := w_k\phi^{(l)} \qquad \Rightarrow \qquad f^{(l)} \sim \mathcal{GP}\Big(0, \underbrace{\theta_l^2{\phi^{(l)}}^T \phi^{(l)}}_{:=k^{(l)}} \Big) = \mathcal{GP}\big(0, k^{(l)} \big) \, . \end{align*}To construct the posterior for individual features consider $\mathbf{f} \, = (f^{(1)},f^{(2)},\dots )^T $Explicitly formatted as bold-vector here.. Therefore $\mathbf{f}\sim \mathcal{GP}(\mathbf{0}, K)$, where $K = \mathrm{diag}\, (k^{(1)}, k^{(2)}, \dots )$ is a (block)-diagonal matrix. The Gaussian Process Regression is then carried out by the assumption $Y \vert \mathbf f \sim \mathcal N (\vec 1 ^T \mathbf f, \sigma^2 \boldsymbol 1)$ where $\vec 1$ is the vector containing only ones of suitable size. Formula \eqref{eq:bayes_gaussian} in combination with the diagonal form of $K$ yields:\begin{align*} \mathbf f_x \vert Y=y \sim \mathcal N\big(K_{xX} G^{-1}y, K_{xx}-K_{xX}G^{-1}K_{Xx}^T\big)\, . \end{align*}Note that when doing this one gets a really similar posterior as before. The information that is additionally added here is the independence of processes / features which are the summands of $f$.

- The posterior for single processes / features is the marginal of this distribution, which can be calculated using \eqref{eq:conditionals_gaussian}:

\begin{align*} f^{(i)} \vert Y \sim \mathcal N \left(k_{xX}^{(i)} G^{-1}Y, \ k_{xx}^{(i)} - k_{xX}^{(i)} G^{-1}{k_{Xx}^{(i)}}^T \right) \, . \end{align*}

- Posterior estimates on Feature $\boldsymbol{\phi^{(i)}}$ Effect: Converting a "process" $f^{(l)}$ which was constructed using a feature $\phi^{(l)}$ via $f^{(l)} = w_l^T \phi^{(l)}$, one can transfer the latter formula into a posterior estimate for $w_l$, which can be easily vectorized for multiple $l$:

\begin{align*} w_l\vert Y &=y \sim \mathcal N \Bigg(\, \theta_l^2 \, {\phi_X^{(l)}}^TG^{-1}y, \ \ \theta_l^2 \Big( \boldsymbol 1 - {\phi_X^{(l)}}^T G^{-1} \phi_X^{(l)}\, \theta_l^2 \Big) \Bigg) \, , \\ w\vert Y &=y \sim \mathcal N \Big(\, \Theta \, \Phi_X^TG^{-1}y, \ \ \Theta \big( \boldsymbol 1 - \Phi_X^T G^{-1} \Phi_X\, \Theta\big) \Big) \, . \end{align*}

- To include $\Sigma_w$ by its own prior might be intractable. In the lecture the approach is to assume

$\Sigma_w = \mathrm{diag}(\theta_1^2,\theta_2^2,...):=\Theta$ which results in the overall kernel to be

- Also note that it might be a good idea to store length scales (e. g. for additive features which add

$\theta_i^2k_i$) to the kernel in log scale. The optimization can still easily be executed using the gradient

\begin{align*} \frac{\partial}{\partial \log \theta_i} k= \frac{\partial k}{\partial \theta_i} \frac{\partial \theta_i}{\partial \log \theta_i} = 2\theta_i k_i \frac{\partial e^{\log \theta_i}}{\partial \log \theta_i} = 2\theta_i k_i e^{\log \theta_i} = 2\theta_i^2k_i\, . \end{align*}

- To compute gradients like the one of $\hat \psi$ is is necessary to compute $\partial_\theta G^{-1}$ for a matrix $G$. To achieve this consider

\begin{align*} 0 = \frac{\partial}{\partial \theta} \boldsymbol 1 = \frac{\partial}{\partial \theta}\big( GG^{-1} \big) = (\partial_\theta G) G^{-1} + G \big( \partial_\theta G^{-1} \big) \qquad \Leftrightarrow \qquad \frac{\partial}{\partial \theta}G^{-1} = -G^{-1} \left(\frac{\partial}{\partial \theta} G\right) G^{-1}\, . \end{align*}

- The derivative of $\log \det G$ can be calculated using Jacobi's Formula and for invertible $G$'s it is:

\begin{align*} \frac{\partial}{\partial \theta} \log \det G = \mathrm{tr} \left(G^{-1} \frac{\partial G}{\partial \theta} \right)\, . \end{align*}

Gauss-Markov Models

- The following part is about connecting chain DAGS - $A\to B\to C$ or $P(A, B, C) = P(C\vert B)\cdot P( B\vert A)\cdot P(A)$ - with the notion of Gaussian Processes. These models work on time series.

- Time Series: A time series is a sequence $[y(t_i)]_{i\in \mathbb N}$ of observations $y_i:= x(t_i) \in \mathbb Y$, indexed by a scalar variable $t\in \mathbb R$. In many applications the time points $t_i$ are equally spaced $t_i = t_0 + i \cdot \delta_t$. Models that account for all values $t\in \mathbb R$ are called continuous tie, while models that only consider $[t_i]_{i \in \mathbb N}$ are called discrete time.

- To keep inference (in real time) feasible, a common assumption is, that the next time step is only dependent on the current time step (Markov Chain, see below).

- In a $\mathcal{GP}$-setting the Markov Chain Assumption resembles a kernel matrix, which is of tridiagonal form.

- (Latent) State Space Models: Observations of $y_1,...,y_N$ with $y_i \in \mathbb R^D$ at times $[t_1,...,t_N]$ with $t_i \in \mathbb R$ and assume a latent state $x_i \in \mathbb R^M$ with $y_i \approx Hx(t_i)$.

- Markov Chain: A joint distribution $p(X)$ over a sequence of random variables $X:=[x_0,...,x_N]$ is said to have the Markov property if

\begin{align*} p(x_i \vert x_0, x_1, ..., x_{i-1}) = p(x_i \vert x_{i-1}) \end{align*}

and the sequence is then called a Markov Chain.

- Prediction Step - Chapman-Kolmogorov Equation: Assuming the Markov property for $x_t$ and that the observable state at time $t$ only depends on the latent state at time $t$: $p(y_t \vert X_{0:t})=p(y_t\vert x_t)$ with using the notation $Y_{0:t}\cong y_0,y_1,\dots y_t$ one can show:

\begin{align*} p(x_t \vert Y_{0:t-1}) = \int p(x_t\vert x_{t-1})p(x_{t-1} \vert Y_{0:t-1})\, \mathrm dx_{t-1} \, . \end{align*}

This is called the prediction step, i. e. taking all the data $Y$ up until and not including $t$ and inferring the latent state $x_t$ at time $t$.

- Update Step - Including the next Datum: To include the datum at the time step $t$ one can rely on Bayes theorem:

\begin{align*} p(x_t\vert Y_{0:t}) = \frac{p(y_t\vert x_t) p(x_t\vert Y_{0:t-1})}{\int p(y_t\vert x_t)p(x_t\vert Y_{0:t-1}) \, \mathrm dx_t}\, . \end{align*}

- Smoothing Step - Looking Back through Time: It might also be of interest to retrospectively infer

$x_t$ at a given time $t$ including all the data $Y$ (not just up to $t-1$ or $t$). Using the Markov assumption and Bayes theorem yields:

\begin{align*} p(x_t\vert Y) = p(x_t \vert Y_{0:t}) \int p(x_{t+1}\vert x_t) \frac{p(x_{t+1}\vert Y)}{p(x_{t+1}\vert Y_{1:t})}\, \mathrm dx_{t+1}\, . \end{align*}